Translated: Why Long Contexts Fail

Original: https://www.dbreunig.com/2025/06/22/how-contexts-fail-and-how-to-fix-them.html

Author: D. B. Reunig

Translator: Gemini 2.5 Pro

Managing Your Context Is the Key to Successful Agents

As frontier model context windows continue to grow[1], with many supporting up to 1 million tokens, I see many excited discussions about how long context windows will unlock the agents of our dreams. After all, with a large enough window, you can simply throw everything you might need into a prompt (tools, documents, instructions, and more) and let the model take care of the rest.

Long contexts kneecapped RAG enthusiasm (no need to find the best doc when you can fit it all in the prompt!), enabled MCP hype (connect to every tool and models can do any job!), and fueled enthusiasm for agents[2].

But in reality, longer contexts do not generate better responses. Overloading your context can cause your agents and applications to fail in surprising ways. Contexts can become poisoned, distracting, confusing, or conflicting. This is especially problematic for agents, which rely on context to gather information, synthesize findings, and coordinate actions.

Let’s run through the ways contexts can get out of hand, then review methods to mitigate or entirely avoid context fails.

Context Fails

Context Poisoning

Context Poisoning is when a hallucination or other error makes it into the context, where it is repeatedly referenced.

The DeepMind team called out context poisoning in the Gemini 2.5 technical report, which we broke down last week. While playing Pokémon, the Gemini agent would occasionally hallucinate, poisoning its context:

An especially egregious form of this issue can take place with “context poisoning” – where many parts of the context (goals, summary) are “poisoned” with misinformation about the game state, which can often take a very long time to undo. As a result, the model can become fixated on achieving impossible or irrelevant goals.

If the “goals” section of its context was poisoned, the agent would develop nonsensical strategies and repeat behaviors in pursuit of a goal that cannot be met.
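
To make the persistence mechanism concrete, here is a toy Python sketch. It assumes a hypothetical agent design in which a "goals" section is re-sent with every prompt and the model never drops a goal it sees there. A single hallucinated goal then rides along in every later turn. The `AgentContext` class and `fake_model_turn` function are illustrative stand-ins, not Gemini's actual Pokémon harness.

```python
from dataclasses import dataclass, field


@dataclass
class AgentContext:
    """Hypothetical agent context: a persistent goals section plus an action history."""

    goals: list[str] = field(default_factory=list)
    history: list[str] = field(default_factory=list)

    def render(self) -> str:
        # Both sections are re-sent to the model on every turn.
        return "GOALS:\n" + "\n".join(self.goals) + "\nHISTORY:\n" + "\n".join(self.history)


def fake_model_turn(ctx: AgentContext, turn: int) -> None:
    """Stand-in for a model call (hypothetical). It never drops a goal it sees
    in its prompt, and on turn 2 it hallucinates a goal that cannot be met."""
    if turn == 2:
        ctx.goals.append("Retrieve the key item (hallucinated: it does not exist)")
    ctx.history.append(f"turn {turn}: pursuing {len(ctx.goals)} goal(s)")


ctx = AgentContext(goals=["Reach the next town"])
for turn in range(1, 6):
    fake_model_turn(ctx, turn)

# Every prompt rendered after turn 2 still carries the poisoned goal.
print(ctx.render())
```

Nothing in this loop ever removes the bad goal. That is the sense in which a poisoned section "can often take a very long time to undo."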

Context Distraction

Context Distraction is when a context grows so long that the model over-focuses on the context, neglecting what it learned during training.

As context grows during an agentic workflow—as the model gathers more information and builds up history—this accumulated context can become distracting rather than helpful. The Pokémon-playing Gemini agent demonstrated this problem clearly:

While Gemini 2.5 Pro supports 1M+ token context, making effective use of it for agents presents a new research frontier. In this agentic setup, it was observed that as the context grew significantly beyond 100k tokens, the agent showed a tendency toward favoring repeating actions from its vast history rather than synthesizing novel plans. This phenomenon, albeit anecdotal, highlights an important distinction between long-context for retrieval and long-context for multi-step, generative reasoning.

Instead of using its training to develop new strategies, the agent became fixated on repeating past actions from its extensive context history.

For smaller models, the distraction ceiling is much lower. A Databricks study found that model correctness began to fall at around 32k tokens for Llama 3.1 405B, and even earlier for smaller models.

If models start to misbehave long before their context windows are filled, what’s the point of super large context windows? In a nutshell: summarization[3] and fact retrieval. If you’re not doing either of those, be wary of your chosen model’s distraction ceiling.
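
One way to act on this advice is to track how large an agent's context has grown and flag it once it passes a ceiling measured for your model. The following is a minimal Python sketch under stated assumptions. It uses a rough four-characters-per-token estimate in place of a real tokenizer. The model key and the helper names are hypothetical. Only the 32k figure for Llama 3.1 405B comes from the Databricks study cited above.

```python
# Rough heuristic (assumption): about 4 characters per token for English text.
# Swap in the model's own tokenizer for real measurements.
CHARS_PER_TOKEN = 4

# Hypothetical table of distraction ceilings. The 405B value reflects the
# Databricks finding that correctness began to fall around 32k tokens.
DISTRACTION_CEILINGS = {"llama-3.1-405b": 32_000}


def estimate_tokens(messages: list[str]) -> int:
    """Estimate a context's token count from its total character length."""
    return sum(len(m) for m in messages) // CHARS_PER_TOKEN


def past_ceiling(messages: list[str], model: str) -> bool:
    """Return True when the estimated context size exceeds the model's recorded ceiling."""
    ceiling = DISTRACTION_CEILINGS.get(model)
    if ceiling is None:
        raise KeyError(f"no distraction ceiling recorded for {model!r}")
    return estimate_tokens(messages) > ceiling


history = ["x" * 40_000, "y" * 100_000]  # 140,000 characters of accumulated context
print(estimate_tokens(history), past_ceiling(history, "llama-3.1-405b"))  # 35000 True
```

The study describes where correctness starts to decline, not a hard cutoff, so this check is better treated as a warning than as a limit.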

Context Confusion

Context Confusion is when superfluous content in the context is used by the model to generate a low-quality response.

For a minute there, it really seemed like everyone was going to ship an MCP. The dream of a powerful model, connected to all your services and stuff, doing all your mundane tasks felt within reach. Just throw all the tool descriptions into the prompt and hit go. Claude’s system prompt showed us the way, as it’s mostly tool definitions or instructions for using tools.

But even if consolidation and competition don’t slow MCPs, Context Confusion will. It turns out there can be such a thing as too many tools.

The Berkeley Function-Calling Leaderboard is a tool-use benchmark that evaluates the ability of models to effectively use tools to respond to prompts. Now on its third version, the leaderboard shows that every model performs worse when provided with more than one tool[4]. Further, the Berkeley team “designed scenarios where none of the provided functions are relevant…we expect the model’s output to be no function call.” Yet all models will occasionally call tools that aren’t relevant.
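
The scoring rule behind those irrelevance scenarios is simple: a test case passes only if the model makes no function call at all. Below is a minimal Python sketch of that rule. It assumes each model response has already been parsed into a list of attempted calls. The `IrrelevanceCase` type and the sample data are hypothetical and are not taken from the leaderboard's own evaluation code.

```python
from dataclasses import dataclass


@dataclass
class IrrelevanceCase:
    """A prompt where none of the provided tools apply, plus the calls the model made."""

    prompt: str
    tool_calls: list[str]  # names of functions the model tried to call; empty if none


def irrelevance_accuracy(cases: list[IrrelevanceCase]) -> float:
    """Fraction of cases in which the model correctly declined to call any tool."""
    if not cases:
        return 0.0
    correct = sum(1 for case in cases if not case.tool_calls)
    return correct / len(cases)


# Hypothetical results: the model wrongly reaches for a tool in one of four cases.
cases = [
    IrrelevanceCase("What is the capital of France?", []),
    IrrelevanceCase("Tell me a joke.", []),
    IrrelevanceCase("Summarize this sentence: the sky is blue.", ["get_weather"]),
    IrrelevanceCase("Translate 'hello' into Spanish.", []),
]
print(irrelevance_accuracy(cases))  # 0.75
```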

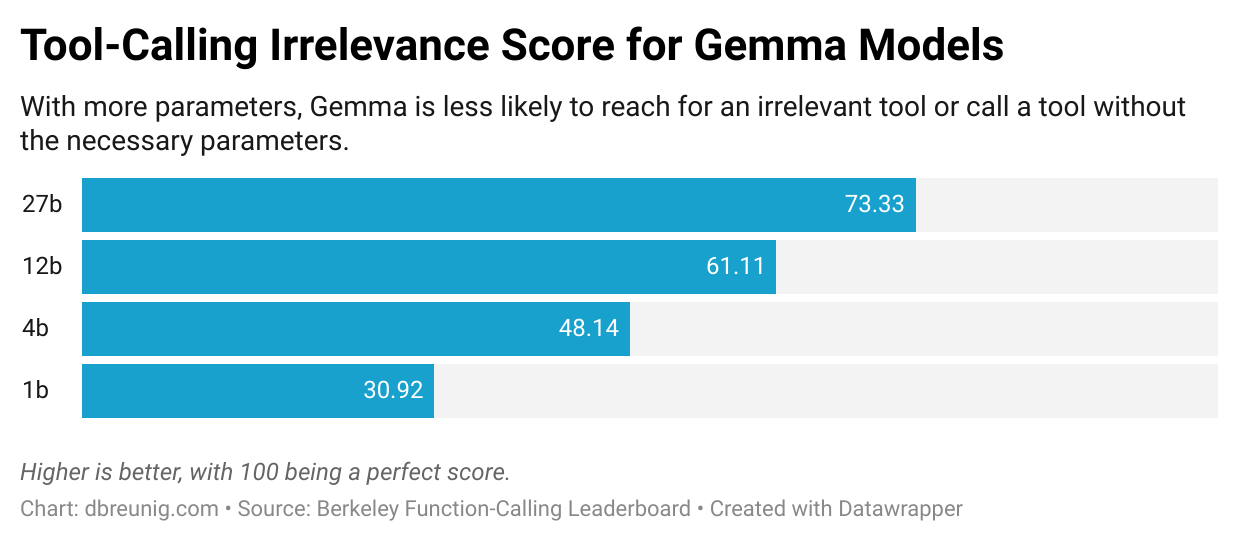

Browsing the function-calling leaderboard, you can see the problem get worse as the models get smaller:

A striking example of context confusion can be seen in a recent paper which evaluated small model performance on the GeoEngine benchmark, a trial that features 46 different tools. When the team gave a quantized (compressed) Llama 3.1 8B a query with all 46 tools, it failed, even though the context was well within the 16k context window. But when they gave the model only 19 tools, it succeeded.

The problem is: if you put something in the context, the model has to pay attention to it. It may be irrelevant information or needless tool definitions, but the model will take it into account. Large models, especially reasoning models, are getting better at ignoring or discarding superfluous context, but we continually see worthless information trip up agents. Longer contexts let us stuff in more info, but this ability comes with downsides.

Context Clash

Context Clash is when you accrue new information and tools in your context that conflict with other information in the context.

This is a more problematic version of Context Confusion: the bad context here isn’t irrelevant, it directly conflicts with other information in the prompt.

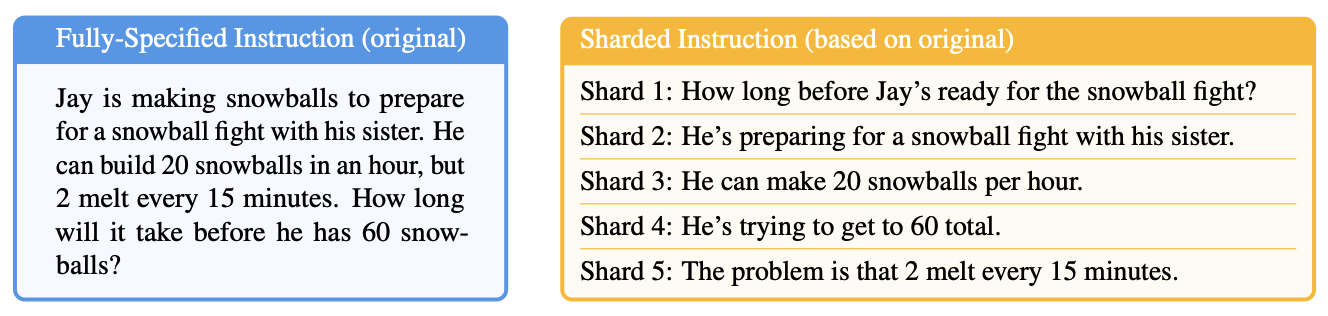

A Microsoft and Salesforce team documented this brilliantly in a recent paper. The team took prompts from multiple benchmarks and ‘sharded’ their information across multiple prompts. Think of it this way: sometimes, you might sit down and type paragraphs into ChatGPT or Claude before you hit enter, considering every necessary detail. Other times, you might start with a simple prompt, then add further details when the chatbot’s answer isn’t satisfactory. The Microsoft/Salesforce team modified benchmark prompts to look like these multistep exchanges:

All the information from the prompt on the left side is contained within the several messages on the right side, which would be played out in multiple chat rounds.
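
The sharded setup can be sketched roughly as follows. A fully specified prompt is split into pieces that are revealed one per turn, and each of the model's replies stays in the transcript. The `shard_prompt` and `run_sharded` helpers, the stubbed model, and the sample shards are hypothetical; the paper's actual benchmark construction is more involved. What the sketch shows is structural: by the final turn, the context contains every premature answer the model gave along the way.

```python
from typing import Callable

Message = dict[str, str]


def shard_prompt(shards: list[str]) -> list[Message]:
    """Turn each piece of the original prompt into its own user message."""
    return [{"role": "user", "content": s} for s in shards]


def run_sharded(shards: list[str], model: Callable[[list[Message]], str]) -> list[Message]:
    """Reveal one shard per turn, appending each model reply to the shared transcript."""
    transcript: list[Message] = []
    for user_msg in shard_prompt(shards):
        transcript.append(user_msg)
        # The model sees all earlier turns, including its own earlier guesses.
        reply = model(transcript)
        transcript.append({"role": "assistant", "content": reply})
    return transcript


def stub_model(transcript: list[Message]) -> str:
    """Hypothetical model that answers immediately, using only the shards seen so far."""
    seen = sum(1 for m in transcript if m["role"] == "user")
    return f"Final answer (based on {seen} shard(s))"


shards = [
    "Write a function that sorts a list.",
    "It should sort in descending order.",
    "Ties must keep their original order.",
]
transcript = run_sharded(shards, stub_model)
premature = [m for m in transcript[:-1] if m["role"] == "assistant"]
print(len(transcript), len(premature))  # 6 2
```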

The sharded prompts yielded dramatically worse results, with an average drop of 39%. And the team tested a range of models – OpenAI’s vaunted o3’s score dropped from 98.1 to 64.1.
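
For a sense of scale, o3's drop can be expressed in absolute points and relative to its fully specified score. This is simple arithmetic on the two numbers reported above. The paper's 39% figure is an average across models and is not recomputed here.

```latex
\begin{aligned}
98.1 - 64.1 &= 34.0 \text{ points} \\
\frac{34.0}{98.1} &\approx 0.347 \approx 34.7\%
\end{aligned}
```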

What’s going on? Why are models performing worse if information is gathered in stages rather than all at once?

The answer is Context Confusion: the assembled context, containing the entirety of the chat exchange, contains early attempts by the model to answer the challenge before it has all the information. These incorrect answers remain present in the context and influence the model when it generates its final answer. The team writes:

We find that LLMs often make assumptions in early turns and prematurely attempt to generate final solutions, on which they overly rely. In simpler terms, we discover that when LLMs take a wrong turn in a conversation, they get lost and do not recover.

This does not bode well for agent builders. Agents assemble context from documents, tool calls, and other models tasked with subproblems. All of this context, pulled from diverse sources, has the potential to disagree with itself. Further, when you connect to MCP tools you didn’t create, there’s a greater chance their descriptions and instructions clash with the rest of your prompt.

The arrival of million-token context windows felt transformative. The ability to throw everything an agent might need into the prompt inspired visions of superintelligent assistants that could access any document, connect to every tool, and maintain perfect memory.

But as we’ve seen, bigger contexts create new failure modes. Context poisoning embeds errors that compound over time. Context distraction causes agents to lean heavily on their context and repeat past actions rather than push forward. Context confusion leads to irrelevant tool or document usage. Context clash creates internal contradictions that derail reasoning.

These failures hit agents hardest because agents operate in exactly the scenarios where contexts balloon: gathering information from multiple sources, making sequential tool calls, engaging in multi-turn reasoning, and accumulating extensive histories.

Fortunately, there are solutions! In an upcoming post we’ll cover techniques for mitigating or avoiding these issues, from methods for dynamically loading tools to spinning up context quarantines.

Read the follow-up article, “How to Fix Your Context”.

- [1] Gemini 2.5 and GPT-4.1 have 1-million-token context windows, large enough to throw Infinite Jest in there with plenty of room to spare.

- [2] The “Long form text” section in the Gemini docs sums up this optimism nicely.

- [3] In fact, in the Databricks study cited above, a frequent way models would fail when given long contexts is that they’d return summarizations of the provided context while ignoring any instructions contained within the prompt.

- [4] If you’re on the leaderboard, pay attention to the “Live (AST)” columns. These metrics use real-world tool definitions contributed to the product by enterprises, “avoiding the drawbacks of dataset contamination and biased benchmarks.”