Translation: Your AI Product Needs Evals

Original: https://hamel.dev/blog/posts/evals/

Author: Hamel Husain

Translator: Gemini 2.5 Pro


Motivation

I started working with language models five years ago when I led the team that created CodeSearchNet, a precursor to GitHub Copilot. Since then, I’ve seen many successful and unsuccessful approaches to building LLM products. I’ve found that unsuccessful products almost always share a common root cause: a failure to create robust evaluation systems.

我从五年前开始接触语言模型,当时我领导团队创建了 CodeSearchNet,它是 GitHub Copilot 的前身。从那时起,我见证了许多构建 LLM 产品的成功与失败。我发现,失败的产品几乎总有一个共同的根本原因:没能建立起一套强大的评估体系。

I’m currently an independent consultant who helps companies build domain-specific AI products. I hope companies can save thousands of dollars in consulting fees by reading this post carefully. As much as I love making money, I hate seeing folks make the same mistake repeatedly.

我目前是一名独立顾问,帮助公司构建特定领域的 AI 产品。我希望公司能通过仔细阅读这篇文章,省下数千美元的咨询费。尽管我喜欢赚钱,但我讨厌看到大家一遍遍地犯同样的错误。

This post outlines my thoughts on building evaluation systems for LLM-powered AI products.

这篇文章概述了我对如何为 LLM 驱动的 AI 产品构建评估体系的一些想法。

Iterating Quickly == Success

Like software engineering, success with AI hinges on how fast you can iterate. You must have processes and tools for:

1. Evaluating quality (ex: tests).

2. Debugging issues (ex: logging & inspecting data).

3. Changing the behavior of the system (prompt eng, fine-tuning, writing code).

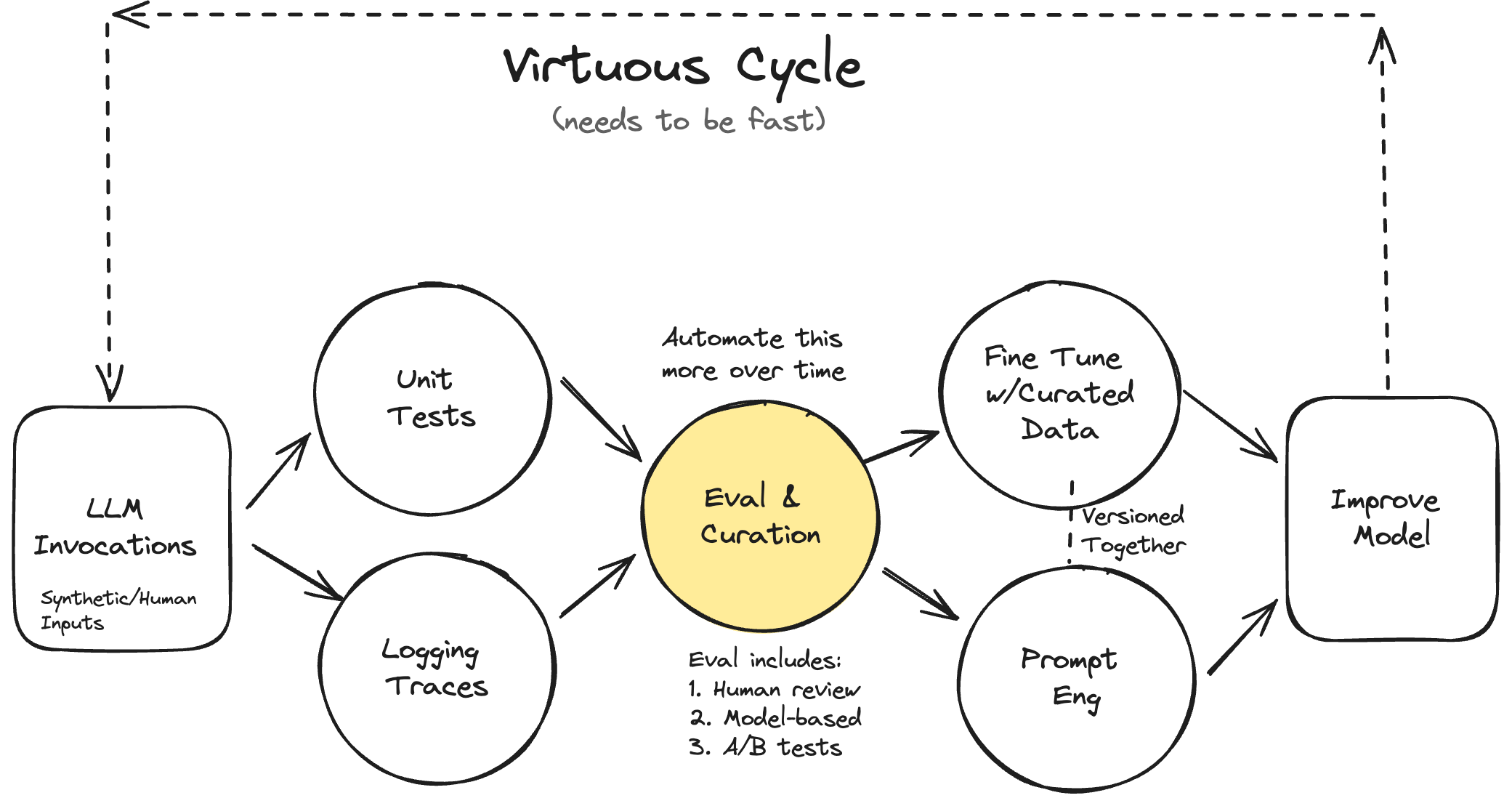

Many people focus exclusively on #3 above, which prevents them from improving their LLM products beyond a demo.[1] Doing all three activities well creates a virtuous cycle differentiating great from mediocre AI products (see the diagram below for a visualization of this cycle).

和软件工程一样,AI 的成功取决于你迭代的速度有多快。你必须拥有相应的流程和工具来:

1. 评估质量(例如:测试)。

2. 调试问题(例如:记录和检查数据)。

3. 改变系统行为(例如:prompt engineering、fine-tuning、编写代码)。

许多人只关注上面的第 3 点,这让他们无法将自己的 LLM 产品从一个 demo 提升到更高水平。[1] 把这三件事都做好,会形成一个良性循环,这也是优秀 AI 产品与平庸之作的区别所在(下图展示了这个循环)。

If you streamline your evaluation process, all other activities become easy. This is very similar to how tests in software engineering pay massive dividends in the long term despite requiring up-front investment.

如果你能简化评估流程,所有其他工作都会变得容易。这和软件工程中的测试非常相似,虽然前期需要投入,但长期来看会带来巨大的回报。

To ground this post in a real-world situation, I’ll walk through a case study in which we built a system for rapid improvement. I’ll primarily focus on evaluation as that is the most critical component.

为了让这篇文章更贴近现实,我将通过一个案例研究来逐步说明我们如何构建一个用于快速改进的系统。我将主要关注评估,因为它是最关键的部分。

Case Study: Lucy, A Real Estate AI Assistant

Rechat is a SaaS application that allows real estate professionals to perform various tasks, such as managing contracts, searching for listings, building creative assets, managing appointments, and more. The thesis of Rechat is that you can do everything in one place rather than having to context switch between many different tools.

Rechat 是一个 SaaS 应用,让房地产专业人士能够执行各种任务,比如管理合同、搜索房源、创建营销材料、管理日程等等。Rechat 的核心理念是,你可以在一个地方完成所有工作,而无需在多个不同工具之间来回切换。

Rechat’s AI assistant, Lucy, is a canonical AI product: a conversational interface that obviates the need to click, type, and navigate the software. During Lucy’s beginning stages, rapid progress was made with prompt engineering. However, as Lucy’s surface area expanded, the performance of the AI plateaued. Symptoms of this were:

- Addressing one failure mode led to the emergence of others, resembling a game of whack-a-mole.

- There was limited visibility into the AI system’s effectiveness across tasks beyond vibe checks.

- Prompts expanded into long and unwieldy forms, attempting to cover numerous edge cases and examples.

Rechat 的 AI 助理 Lucy 是一个典型的 AI 产品:一个对话式界面,省去了用户点击、输入和浏览软件的麻烦。在 Lucy 的早期阶段,通过 prompt engineering 取得了快速进展。然而,随着 Lucy 功能的扩展,AI 的性能开始停滞不前。其症状包括:

- 解决一个失败模式,又会引发其他问题,就像在玩“打地鼠”游戏。

- 除了凭感觉,我们很难了解 AI 系统在各项任务上的实际效果。

- Prompt 变得越来越长、越来越臃肿,试图覆盖大量的边缘案例和示例。

Problem: How To Systematically Improve The AI?

To break through this plateau, we created a systematic approach to improving Lucy centered on evaluation. Our approach is illustrated by the diagram below.

为了突破这个瓶颈,我们创建了一套以评估为中心的系统化方法来改进 Lucy。我们的方法如下图所示。

This diagram is a best-faith effort to illustrate my mental model for improving AI systems. In reality, the process is non-linear and can take on many different forms that may or may not look like this diagram.

这张图是我尽最大努力画出来的,用来展示我改进 AI 系统的思维模型。在现实中,这个过程是非线性的,可能会呈现出多种不同的形式,不一定和图上完全一样。

I discuss the various components of this system in the context of evaluation below.

下面我将在评估的背景下,讨论这个系统的各个组成部分。

The Types Of Evaluation

Rigorous and systematic evaluation is the most important part of the whole system. That is why “Eval and Curation” is highlighted in yellow at the center of the diagram. You should spend most of your time making your evaluation more robust and streamlined.

严谨和系统化的评估是整个体系中最重要的部分。这就是为什么在图的中心,“评估与筛选”(Eval and Curation)被用黄色高亮显示。你应该把大部分时间花在让你的评估体系变得更强大、更流畅上。

There are three levels of evaluation to consider:

- Level 1: Unit Tests

- Level 2: Model & Human Eval (this includes debugging)

- Level 3: A/B testing

The cost of Level 3 > Level 2 > Level 1. This dictates the cadence and manner you execute them. For example, I often run Level 1 evals on every code change, Level 2 on a set cadence and Level 3 only after significant product changes. It’s also helpful to conquer a good portion of your Level 1 tests before you move into model-based tests, as they require more work and time to execute.

你需要考虑三个层级的评估:

- Level 1: 单元测试

- Level 2: 模型和人工评估(包括调试)

- Level 3: A/B 测试

成本上,Level 3 > Level 2 > Level 1。这决定了你执行它们的频率和方式。例如,我通常在每次代码变更时都运行 Level 1 评估,按固定节奏运行 Level 2 评估,只有在产品有重大变更后才进行 Level 3 评估。此外,在进入基于模型的测试之前,最好先搞定大部分 Level 1 测试,因为前者需要更多的工作和时间来执行。

There isn’t a strict formula as to when to introduce each level of testing. You want to balance getting user feedback quickly, managing user perception, and the goals of your AI product. This isn’t too dissimilar from the balancing act you must do for products more generally.

至于何时引入每个层级的测试,并没有严格的公式。你需要在快速获取用户反馈、管理用户认知以及实现 AI 产品目标之间取得平衡。这与你为普通产品所做的权衡并无太大不同。

Level 1: Unit Tests

Unit tests for LLMs are assertions (like you would write in pytest). Unlike typical unit tests, you want to organize these assertions for use in places beyond unit tests, such as data cleaning and automatic retries (using the assertion error to course-correct) during model inference. The important part is that these assertions should run fast and cheaply as you develop your application so that you can run them every time your code changes. If you have trouble thinking of assertions, you should critically examine your traces and failure modes. Also, do not shy away from using an LLM to help you brainstorm assertions!

LLM 的单元测试就是断言(assertion),就像你在 pytest 中写的那样。与典型的单元测试不同,你应该将这些断言组织起来,以便在单元测试之外的地方使用,比如数据清洗,或在模型推理时进行自动重试(利用断言错误来修正路线)。重要的是,这些断言在你开发应用时应该能够快速、低成本地运行,这样你每次修改代码都能跑一遍。如果你想不出该写什么断言,你应该仔细检查你的 traces 和失败模式。另外,别害怕用 LLM 来帮你头脑风暴,想出一些断言!

Step 1: Write Scoped Tests

The most effective way to think about unit tests is to break down the scope of your LLM into features and scenarios. For example, one feature of Lucy is the ability to find real estate listings, which we can break down into scenarios like so:

思考单元测试最有效的方法,是把你的 LLM 的功能范围分解成特性(feature)和场景(scenario)。例如,Lucy 的一个特性是能够查找房源,我们可以将其分解为如下场景:

Feature: Listing Finder

This feature to be tested is a function call that responds to a user request to find a real estate listing. For example, “Please find listings with more than 3 bedrooms less than $2M in San Jose, CA”

The LLM converts this into a query that gets run against the CRM. The assertion then verifies that the expected number of results is returned. In our test suite, we have three user inputs that trigger each of the scenarios below, which then execute corresponding assertions (this is an oversimplified example for illustrative purposes):

这个待测试的特性是一个函数调用,它响应用户查找房源的请求。例如,“请在加州圣何塞查找卧室超过3间、价格低于200万美元的房源”。

LLM 会将这个请求转换成一个查询,并在 CRM 系统中执行。然后,断言会验证返回的结果数量是否符合预期。在我们的测试套件中,有三个用户输入分别触发以下三种场景,并执行相应的断言(为方便说明,这是一个简化后的例子):

| Scenario | Assertions |
|---|---|
| Only one listing matches user query | len(listing_array) == 1 |
| Multiple listings match user query | len(listing_array) > 1 |
| No listings match user query | len(listing_array) == 0 |

| 场景 | 断言 |
|---|---|
| 只有一个房源匹配用户查询 | len(listing_array) == 1 |
| 多个房源匹配用户查询 | len(listing_array) > 1 |
| 没有房源匹配用户查询 | len(listing_array) == 0 |

There are also generic tests that aren’t specific to any one feature. For example, here is the code for one such generic test that ensures the UUID is not mentioned in the output:

还有一些通用测试,不针对任何特定特性。例如,下面是一个通用测试的代码,确保输出中不包含 UUID:

```javascript
// `expect` is assumed to be a Chai-style BDD assertion that is already in scope.
const noExposedUUID = message => {
  // Remove all text within double curly braces
  const sanitizedComment = message.comment.replace(/\{\{.*?\}\}/g, '')

  // Search for exposed UUIDs
  const regexp = /[0-9a-f]{8}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{12}/ig
  const matches = Array.from(sanitizedComment.matchAll(regexp))

  // Fail the test if any UUID survives in the user-facing text
  expect(matches.length, 'Exposed UUIDs').to.equal(0, 'Exposed UUIDs found')
}
```

CRM results returned to the LLM contain fields that shouldn’t be surfaced to the user, such as the UUID associated with an entry. Our LLM prompt tells the LLM not to include UUIDs. We use a simple regex to assert that the LLM response doesn’t include UUIDs.

返回给 LLM 的 CRM 结果包含一些不应向用户展示的字段,比如与条目关联的 UUID。我们的 LLM prompt 会告诉 LLM 不要包含 UUID。我们用一个简单的正则表达式来断言 LLM 的响应中不包含 UUID。

Rechat has hundreds of these unit tests. We continuously update them based on new failures we observe in the data as users challenge the AI or the product evolves. These unit tests are crucial to getting feedback quickly when iterating on your AI system (prompt engineering, improving RAG, etc.). Many people eventually outgrow their unit tests and move on to other levels of evaluation as their product matures, but it is essential not to skip this step!

Rechat 有数百个这样的单元测试。我们根据用户挑战 AI 或产品演进过程中在数据里观察到的新失败,持续更新这些测试。 当你迭代 AI 系统(prompt engineering、改进 RAG 等)时,这些单元测试对于快速获得反馈至关重要。随着产品的成熟,许多人最终会觉得单元测试不够用,转而采用其他层级的评估,但跳过这一步是万万不可的!

Step 2: Create Test Cases

To test these assertions, you must generate test cases or inputs that will trigger all scenarios you wish to test. I often utilize an LLM to generate these inputs synthetically; for example, here is one such prompt Rechat uses to generate synthetic inputs for a feature that creates and retrieves contacts:

为了测试这些断言,你必须生成能够触发所有你想测试的场景的测试用例或输入。我经常利用 LLM 来合成这些输入;例如,下面是 Rechat 用来为一个创建和检索联系人功能生成合成输入的 prompt:

Write 50 different instructions that a real estate agent can give to his assistant to create contacts on his CRM. The contact details can include name, phone, email, partner name, birthday, tags, company, address and job.

For each of the instructions, you need to generate a second instruction which can be used to look up the created contact.

The results should be a JSON code block with only one string as the instruction like the following:

[

["Create a contact for John (johndoe@apple.com)",

"What's the email address of John Smith?"]

]

写出 50 条房地产经纪人可能会给他的助理下达的、用来在他的 CRM 中创建联系人的不同指令。联系人信息可以包括姓名、电话、邮箱、伴侣姓名、生日、标签、公司、地址和工作。

对于每一条指令,你需要生成第二条指令,用来查找刚刚创建的联系人。

结果应该是一个 JSON 代码块,其中只有作为指令的字符串,格式如下:

[

["为 John 创建联系人 (johndoe@apple.com)",

"John Smith 的邮箱地址是什么?"]

]

Using the above prompt, we generate test cases like below:

使用上面的 prompt,我们生成了如下的测试用例:

```
[
  [
    'Create a contact for John Smith (johndoe@apple.com) with phone number 123-456-7890 and address 123 Apple St.',
    'What\'s the email address of John Smith?'
  ],
  [
    'Add Emily Johnson with phone 987-654-3210, email emilyj@email.com, and company ABC Inc.',
    'What\'s the phone number for Emily Johnson?'
  ],
  [
    'Create a contact for Tom Williams with birthday 10/20/1985, company XYZ Ltd, and job title Manager.',
    'What\'s Tom Williams\' job title?'
  ],
  [
    'Add a contact for Susan Brown with partner name James Brown, and email susanb@email.com.',
    'What\'s the partner name of Susan Brown?'
  ],
  …
]
```

```
[
  [
    '为 John Smith 创建一个联系人,邮箱是 johndoe@apple.com,电话是 123-456-7890,地址是 123 Apple St.',
    'John Smith 的邮箱地址是什么?'
  ],
  [
    '添加 Emily Johnson,电话 987-654-3210,邮箱 emilyj@email.com,公司 ABC Inc.',
    'Emily Johnson 的电话号码是多少?'
  ],
  [
    '为 Tom Williams 创建一个联系人,生日 1985年10月20日,公司 XYZ Ltd,职位是经理。',
    'Tom Williams 的职位是什么?'
  ],
  [
    '为 Susan Brown 添加一个联系人,伴侣姓名 James Brown,邮箱 susanb@email.com。',
    'Susan Brown 的伴侣姓名是什么?'
  ],
  ...
]
```

For each of these test cases, we execute the first user input to create the contact. We then execute the second query to fetch that contact. If the CRM doesn’t return exactly 1 result then we know there was a problem either creating or fetching the contact. We can also run generic assertions like the one to verify UUIDs are not in the response. You must constantly update these tests as you observe data through human evaluation and debugging. The key is to make these as challenging as possible while representing users’ interactions with the system.

对于每个测试用例,我们先执行第一个用户输入来创建联系人,然后执行第二个查询来获取该联系人。如果 CRM 返回的结果不恰好是 1 条,我们就知道在创建或获取联系人时出了问题。我们也可以运行通用的断言,比如验证响应中是否包含 UUID。你必须通过人工评估和调试,在观察数据的过程中不断更新这些测试。关键在于,要让这些测试尽可能具有挑战性,同时又能代表用户与系统的真实交互。
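The create-then-lookup check described above might be sketched as follows. `FakeCRM` is a hypothetical in-memory stand-in, and the structured `create` and `lookup` fields replace what the LLM would extract from each instruction, so this shows only the "exactly 1 result" assertion and not a real model call.

```javascript
// Hypothetical in-memory CRM. In the real test, each instruction would go
// through the LLM, which would issue the create or lookup call itself.
class FakeCRM {
  constructor() {
    this.contacts = [];
  }
  create(contact) {
    this.contacts.push(contact);
  }
  search(name) {
    return this.contacts.filter((c) => c.name.toLowerCase() === name.toLowerCase());
  }
}

// Each test case pairs a "create" step with a "lookup" step, mirroring the
// [create instruction, lookup instruction] pairs generated above.
const testCases = [
  { create: { name: 'John Smith', email: 'johndoe@apple.com' }, lookup: 'John Smith' },
  { create: { name: 'Emily Johnson', phone: '987-654-3210' }, lookup: 'Emily Johnson' },
  // Deliberately broken case: the lookup does not match the created contact.
  { create: { name: 'Tom Williams', job: 'Manager' }, lookup: 'Tom William' },
];

function runCase(testCase) {
  const crm = new FakeCRM(); // fresh CRM per case so cases cannot interfere
  crm.create(testCase.create);
  const found = crm.search(testCase.lookup);
  // Exactly one result is expected; anything else signals a create or fetch problem.
  return { lookup: testCase.lookup, passed: found.length === 1, count: found.length };
}

const report = testCases.map(runCase);
console.log(report);
```

The third case fails on purpose, to show what a create-or-fetch problem looks like in the report.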

You don’t need to wait for production data to test your system. You can make educated guesses about how users will use your product and generate synthetic data. You can also let a small set of users use your product and let their usage refine your synthetic data generation strategy. One signal you are writing good tests and assertions is when the model struggles to pass them - these failure modes become problems you can solve with techniques like fine-tuning later on.

你不需要等到有生产数据才来测试系统。你可以对用户将如何使用你的产品做出有根据的猜测,并生成合成数据。你也可以让一小部分用户使用你的产品,然后根据他们的使用情况来改进你的合成数据生成策略。一个表明你写出了好的测试和断言的信号是,模型很难通过它们——这些失败模式就成了你之后可以用 fine-tuning 等技术来解决的问题。

On a related note, unlike traditional unit tests, you don’t necessarily need a 100% pass rate. Your pass rate is a product decision, depending on the failures you are willing to tolerate.

另外,与传统的单元测试不同,你不一定需要 100% 的通过率。你的通过率是一个产品决策,取决于你愿意容忍哪些失败。
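Treating the pass rate as a product decision can be as simple as making the threshold a parameter. The sketch below is illustrative: the `summarize` helper and the 0.85 threshold are assumptions, not values from the original post.

```javascript
// Pass rate = passed / total. The threshold is a product decision, so it is a
// parameter here rather than a hard-coded 100%.
function summarize(results, minPassRate) {
  const passed = results.filter((r) => r.passed).length;
  const passRate = results.length === 0 ? 0 : passed / results.length;
  return { passed, total: results.length, passRate, ok: passRate >= minPassRate };
}

// Illustrative results: 9 of 10 tests passing.
const sampleResults = Array.from({ length: 10 }, (_, i) => ({ passed: i !== 3 }));

const strict = summarize(sampleResults, 1.0); // traditional "everything must pass"
const tolerant = summarize(sampleResults, 0.85); // hypothetical tolerated failure level
console.log(strict.ok, tolerant.ok);
```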

Step 3: Run & Track Your Tests Regularly

There are many ways to orchestrate Level 1 tests. Rechat has been leveraging CI infrastructure (e.g., GitHub Actions, GitLab Pipelines, etc.) to execute these tests. However, the tooling for this part of the workflow is nascent and evolving rapidly.

有很多方法可以组织 Level 1 测试。Rechat 一直在利用 CI 基础设施(例如 GitHub Actions、GitLab Pipelines 等)来执行这些测试。然而,这个工作流程部分的工具还处于初期阶段,并且正在快速发展。

My advice is to orchestrate tests that involve the least friction in your tech stack. In addition to tracking tests, you need to track the results of your tests over time so you can see if you are making progress. If you use CI, you should collect metrics along with versions of your tests/prompts outside your CI system for easy analysis and tracking.

我的建议是,选择在你的技术栈中摩擦最小的方式来组织测试。除了追踪测试本身,你还需要追踪测试结果随时间的变化,这样才能看到你是否在取得进展。如果你使用 CI,你应该在 CI 系统之外收集指标以及测试/prompt 的版本,以便于分析和追踪。
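One low-friction way to keep results outside the CI system is to append a record per run to a file or table that your analytics tool can read. The sketch below uses a JSON Lines file in a temporary directory; the record fields (`promptVersion`, `passed`, `total`) and the file name are assumptions chosen for illustration.

```javascript
const fs = require('node:fs');
const os = require('node:os');
const path = require('node:path');

// Append one record per test run to a JSON Lines file kept outside the CI system.
function recordRun(file, { promptVersion, passed, total }) {
  const record = {
    timestamp: new Date().toISOString(),
    promptVersion,
    passed,
    total,
    passRate: total === 0 ? 0 : passed / total,
  };
  fs.appendFileSync(file, JSON.stringify(record) + '\n');
  return record;
}

// Read the history back so it can be charted or compared across versions.
function loadHistory(file) {
  return fs
    .readFileSync(file, 'utf8')
    .split('\n')
    .filter(Boolean)
    .map((line) => JSON.parse(line));
}

// Demo in a temporary directory; a real setup would more likely write to a
// database or analytics store.
const dir = fs.mkdtempSync(path.join(os.tmpdir(), 'eval-history-'));
const historyFile = path.join(dir, 'runs.jsonl');
recordRun(historyFile, { promptVersion: 'v1', passed: 70, total: 100 });
recordRun(historyFile, { promptVersion: 'v2', passed: 85, total: 100 });

const history = loadHistory(historyFile);
console.log(history.map((r) => `${r.promptVersion}: ${r.passRate}`));
```

Storing the prompt or test version next to each result is what makes it possible to tell later which change moved the numbers.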

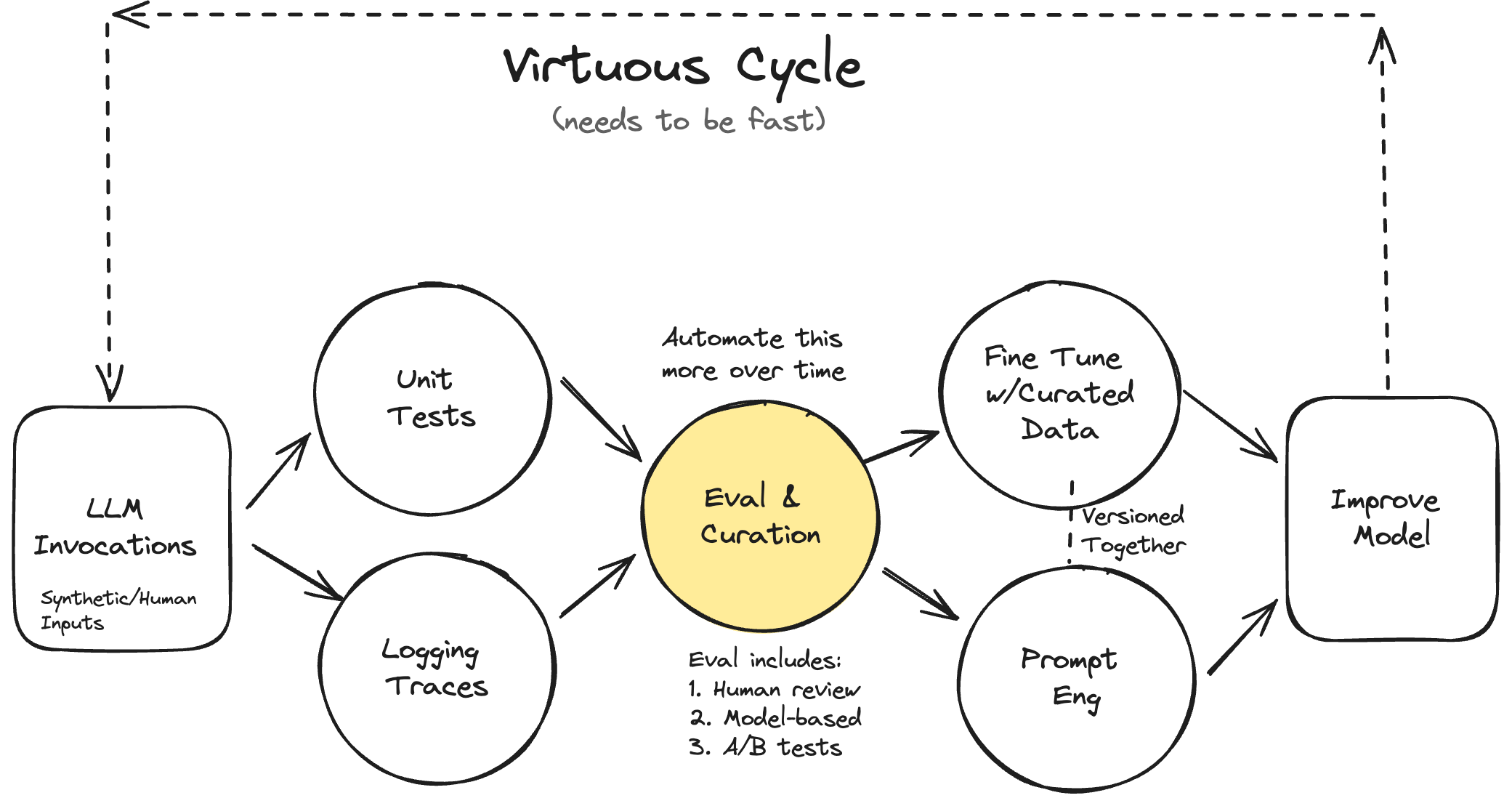

I recommend starting simple and leveraging your existing analytics system to visualize your test results. For example, Rechat uses Metabase to track their LLM test results over time. Below is a screenshot of a dashboard Rechat built with Metabase:

我建议从简单开始,利用你现有的分析系统来可视化你的测试结果。例如,Rechat 使用 Metabase 来追踪他们的 LLM 测试结果随时间的变化。下面是 Rechat 用 Metabase 构建的一个仪表盘的截图:

This screenshot shows the prevalence of a particular error (shown in yellow) in Lucy before (left) vs after (right) we addressed it.

这张截图显示了在我们解决某个特定错误(黄色部分)之前(左)和之后(右),该错误在 Lucy 中出现的频率。

Level 2: Human & Model Eval

After you have built a solid foundation of Level 1 tests, you can move on to other forms of validation that cannot be tested by assertions alone. A prerequisite to performing human and model-based eval is to log your traces.

在你为 Level 1 测试打下坚实的基础后,就可以进行其他形式的验证了,这些验证是单靠断言无法测试的。进行人工和基于模型的评估,一个先决条件是记录你的 traces。

Logging Traces

A trace is a concept that has been around for a while in software engineering and is a log of a sequence of events such as user sessions or a request flow through a distributed system. In other words, tracing is a logical grouping of logs. In the context of LLMs, traces often refer to conversations you have with an LLM. For example, a user message, followed by an AI response, followed by another user message, would be an example of a trace.

Trace 是一个在软件工程中已经存在了一段时间的概念,它是一系列事件的日志,例如用户会话或请求在分布式系统中的流转。换句话说,tracing 是对日志的逻辑分组。在 LLM 的语境下,traces 通常指你与 LLM 的对话。例如,一条用户消息,接着是 AI 的响应,再接着是另一条用户消息,这就是一个 trace 的例子。
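To make "a logical grouping of logs" concrete, here is a small sketch that turns flat log events into per-conversation traces. The field names (`traceId`, `ts`, `role`, `content`) are illustrative assumptions, not a standard schema or the format of any particular logging tool.

```javascript
// Flat log events, as they might arrive from an application.
const logs = [
  { traceId: 't1', ts: 2, role: 'assistant', content: 'I found 3 listings.' },
  { traceId: 't2', ts: 1, role: 'user', content: 'Create a contact for John.' },
  { traceId: 't1', ts: 1, role: 'user', content: 'Find listings in San Jose.' },
  { traceId: 't1', ts: 3, role: 'user', content: 'Show me the cheapest one.' },
];

// A trace is a logical grouping of logs: collect events by traceId and order
// them by timestamp so each trace reads as a conversation.
function groupIntoTraces(events) {
  const traces = new Map();
  for (const event of events) {
    if (!traces.has(event.traceId)) traces.set(event.traceId, []);
    traces.get(event.traceId).push(event);
  }
  for (const trace of traces.values()) trace.sort((a, b) => a.ts - b.ts);
  return traces;
}

const traces = groupIntoTraces(logs);
console.log(traces.get('t1').map((e) => `${e.role}: ${e.content}`));
```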

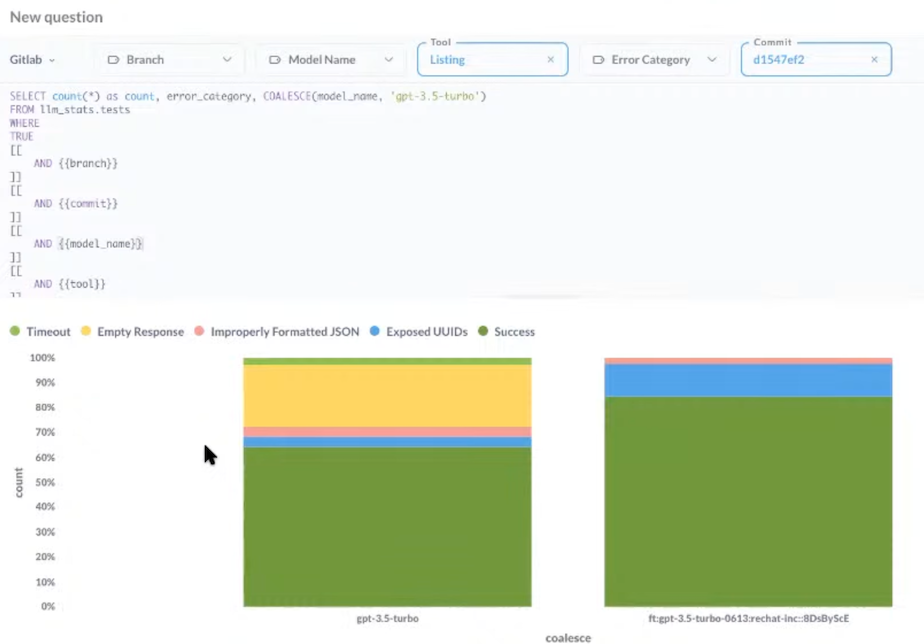

There are a growing number of solutions for logging LLM traces.[2] Rechat uses LangSmith, which logs traces and allows you to view them in a human-readable way with an interactive playground to iterate on prompts. Sometimes, logging your traces requires you to instrument your code. In this case, Rechat was using LangChain which automatically logs trace events to LangSmith for you. Here is a screenshot of what this looks like:

现在有越来越多用于记录 LLM traces 的解决方案。[2] Rechat 使用 LangSmith,它可以记录 traces,并允许你以人类可读的方式查看它们,还有一个交互式的 playground 供你迭代 prompts。有时,记录 traces 需要你对代码进行插桩。在这个案例中,Rechat 使用了 LangChain,它会自动将 trace 事件记录到 LangSmith。下面是它的一个截图:

I like LangSmith - it doesn’t require that you use LangChain and is intuitive and easy to use. Searching, filtering, and reading traces are essential features for whatever solution you pick. I’ve found that some tools do not implement these basic functions correctly!

我喜欢 LangSmith——它不要求你必须使用 LangChain,而且直观易用。无论你选择哪种解决方案,搜索、过滤和阅读 traces 都是必不可少的功能。我发现有些工具连这些基本功能都没有实现好!

Looking At Your Traces

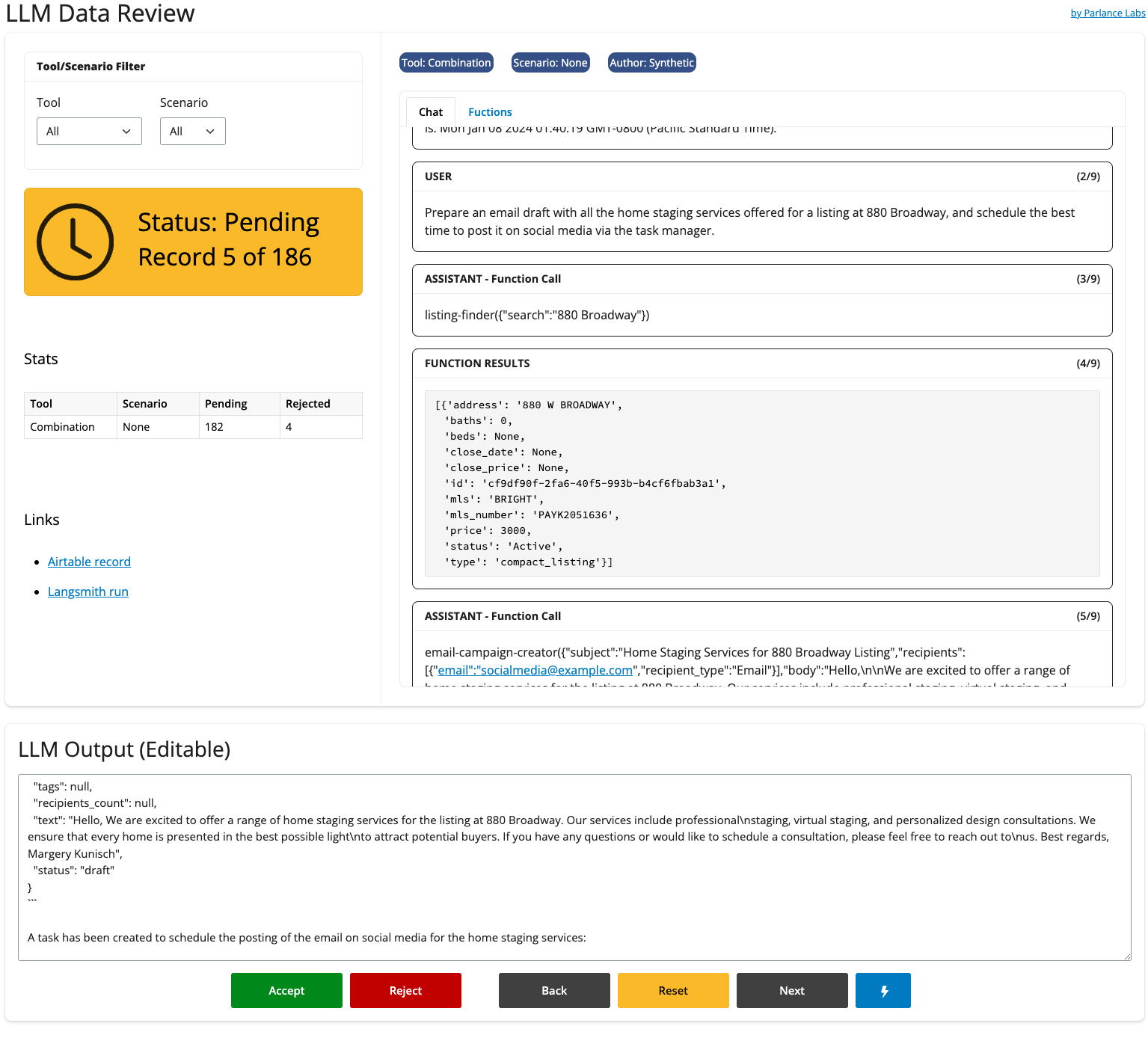

You must remove all friction from the process of looking at data. This means rendering your traces in domain-specific ways. I’ve often found that it’s better to build my own data viewing & labeling tool so I can gather all the information I need onto one screen. In Lucy’s case, we needed to look at many sources of information (trace log, the CRM, etc) to understand what the AI did. This is precisely the type of friction that needs to be eliminated. In Rechat’s case, this meant adding information like:

- What tool (feature) & scenario was being evaluated.

- Whether the trace resulted from a synthetic input or a real user input.

- Filters to navigate between different tools and scenario combinations.

- Links to the CRM and trace logging system for the current record.

你必须消除查看数据过程中的一切阻力。 这意味着要以特定领域的方式来呈现你的 traces。我经常发现,最好是构建自己的数据查看和标注工具,这样我就可以把所有需要的信息都集中到一个屏幕上。在 Lucy 的案例中,我们需要查看许多信息来源(trace 日志、CRM 等)来理解 AI 到底做了什么。这正是需要消除的阻力。对 Rechat 来说,这意味着要添加如下信息:

- 正在评估哪个工具(特性)和场景。

- 该 trace 是来自合成输入还是真实用户输入。

- 用于在不同工具和场景组合之间导航的筛选器。

- 指向当前记录的 CRM 和 trace 记录系统的链接。
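The metadata and filters listed above could be modeled roughly like this. All field names and the `crm.example` URLs are hypothetical; the sketch only shows how annotating each trace with tool, scenario, and source makes filtering trivial in a custom viewer.

```javascript
// Trace records annotated with the metadata listed above.
const traceRecords = [
  { id: 1, tool: 'listing-finder', scenario: 'no-match', source: 'synthetic', crmUrl: 'https://crm.example/records/1' },
  { id: 2, tool: 'listing-finder', scenario: 'one-match', source: 'user', crmUrl: 'https://crm.example/records/2' },
  { id: 3, tool: 'contacts', scenario: 'create', source: 'synthetic', crmUrl: 'https://crm.example/records/3' },
];

// Keep records matching every provided filter; omitted filters match anything.
function filterTraces(records, filters = {}) {
  return records.filter((record) =>
    Object.entries(filters).every(([key, value]) => record[key] === value)
  );
}

// Distinct values for a field, useful for populating a filter dropdown.
function distinct(records, key) {
  return [...new Set(records.map((r) => r[key]))];
}

console.log(filterTraces(traceRecords, { tool: 'listing-finder', source: 'synthetic' }));
console.log(distinct(traceRecords, 'tool'));
```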

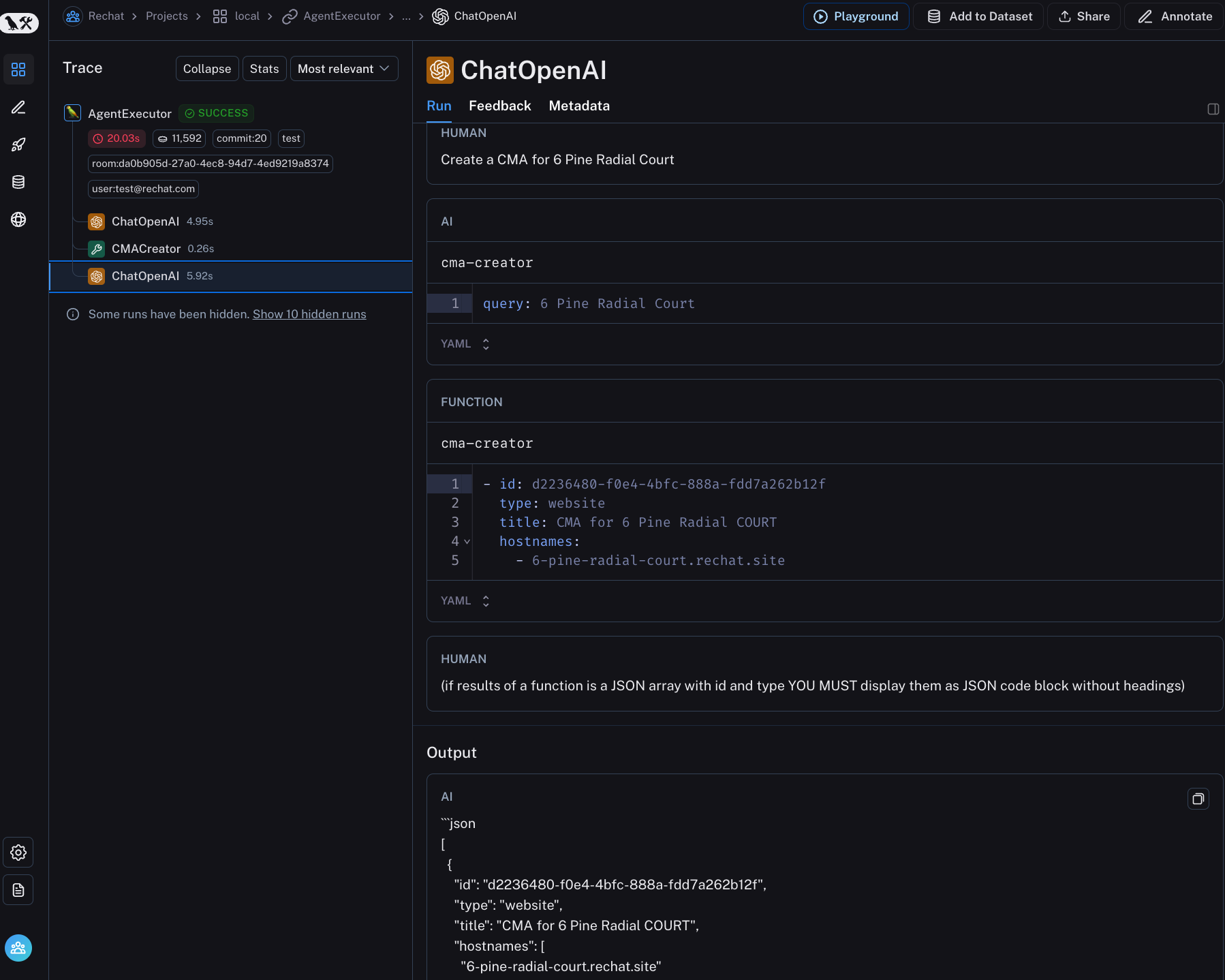

I’ve built different variations of this tool for each problem I’ve worked on. Sometimes, I even need to embed another application to see what the user interaction looks like. Below is a screenshot of the tool we built to evaluate Rechat’s traces:

我为我处理过的每个问题都构建过这个工具的不同变体。有时,我甚至需要嵌入另一个应用程序,才能看到用户交互是什么样的。下面是我们为评估 Rechat 的 traces 而构建的工具的截图:

Another design choice specific to Lucy is that we noticed that many failures involved small mistakes in the final output of the LLM (format, content, etc). We decided to make the final output editable by a human so that we could curate & fix data for fine-tuning.

另一个针对 Lucy 的特定设计选择是,我们注意到许多失败都涉及 LLM 最终输出中的小错误(格式、内容等)。我们决定让最终输出可以由人工编辑,这样我们就可以为 fine-tuning 筛选和修复数据。

These tools can be built with lightweight front-end frameworks like Gradio, Streamlit, Panel, or Shiny in less than a day. The tool shown above was built with Shiny for Python. Furthermore, there are tools like Lilac which uses AI to search and filter data semantically, which is incredibly handy for finding a set of similar data points while debugging an issue.

这些工具可以用 Gradio、Streamlit、Panel 或 Shiny 这样的轻量级前端框架在一天之内构建出来。上面展示的工具就是用 Shiny for Python 构建的。此外,还有像 Lilac 这样的工具,它使用 AI 进行语义搜索和过滤数据,这在调试问题时查找一组相似的数据点非常方便。

I often start by labeling examples as good or bad. I’ve found that assigning scores or more granular ratings is more onerous to manage than binary ratings. There are advanced techniques you can use to make human evaluation more efficient or accurate (e.g., active learning, consensus voting, etc.), but I recommend starting with something simple. Finally, like unit tests, you should organize and analyze your human-eval results to assess if you are progressing over time.

我通常从将样本标注为“好”或“坏”开始。我发现,分配分数或更细粒度的评级比二元评级管理起来更麻烦。你可以使用一些高级技术来提高人工评估的效率或准确性(例如,active learning、consensus voting 等),但我建议从简单的开始。最后,和单元测试一样,你应该组织和分析你的人工评估结果,以评估你是否在随时间取得进展。

As discussed later, these labeled examples measure the quality of your system, validate automated evaluation, and curate high-quality synthetic data for fine-tuning.

正如稍后将讨论的,这些标注好的样本可以用来衡量你的系统质量、验证自动化评估,以及为 fine-tuning 筛选高质量的合成数据。

How Much Data Should You Look At?

I often get asked how much data to examine. When starting, you should examine as much data as possible. I usually read traces generated from ALL test cases and user-generated traces at a minimum. You can never stop looking at data; no free lunch exists. However, you can sample your data more over time, lessening the burden.[3]

我经常被问到要检查多少数据。刚开始时,你应该检查尽可能多的数据。我通常至少会阅读所有测试用例生成的 traces 和用户生成的 traces。你永远不能停止看数据,天下没有免费的午餐。不过,随着时间的推移,你可以更多地对数据进行抽样,以减轻负担。[3]
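Sampling can be kept reproducible so that two reviewers look at the same traces. The following is a sketch, not a prescribed method: `sampleTraces` and the 25% rate are assumptions, and the seeded generator is the small public-domain `mulberry32` routine, used here only so the same seed yields the same sample.

```javascript
// Small seeded pseudo-random generator (mulberry32) so a sample can be reproduced.
function mulberry32(seed) {
  let a = seed >>> 0;
  return () => {
    a = (a + 0x6d2b79f5) >>> 0;
    let t = a;
    t = Math.imul(t ^ (t >>> 15), t | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296;
  };
}

// Draw a fraction `rate` of the traces without replacement.
function sampleTraces(traceIds, rate, rng = Math.random) {
  const pool = [...traceIds];
  const size = Math.min(pool.length, Math.ceil(pool.length * rate));
  // Partial Fisher-Yates shuffle: only the first `size` positions are needed.
  for (let i = 0; i < size; i++) {
    const j = i + Math.floor(rng() * (pool.length - i));
    [pool[i], pool[j]] = [pool[j], pool[i]];
  }
  return pool.slice(0, size);
}

const allTraceIds = Array.from({ length: 200 }, (_, i) => `trace-${i}`);
const early = sampleTraces(allTraceIds, 1.0, mulberry32(42)); // read everything at first
const later = sampleTraces(allTraceIds, 0.25, mulberry32(42)); // sample once patterns are familiar
console.log(early.length, later.length);
```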

Automated Evaluation w/ LLMs

Many vendors want to sell you tools that claim to eliminate the need for a human to look at the data. Having humans periodically evaluate at least a sample of traces is a good idea. I often find that “correctness” is somewhat subjective, and you must align the model with a human.

许多供应商想卖给你一些工具,声称可以让你不再需要人工查看数据。让人们定期评估至少一部分 traces 是一个好主意。我常常发现,“正确性”在某种程度上是主观的,你必须让模型与人的判断对齐。

You should track the correlation between model-based and human evaluation to decide how much you can rely on automatic evaluation. Furthermore, by collecting critiques from labelers explaining why they are making a decision, you can iterate on the evaluator model to align it with humans through prompt engineering or fine-tuning. However, I tend to favor prompt engineering for evaluator model alignment.

你应该追踪基于模型的评估和人工评估之间的相关性,来决定你在多大程度上可以依赖自动化评估。此外,通过收集标注员的评判意见(解释他们做决定的原因),你可以通过 prompt engineering 或 fine-tuning 来迭代评估模型,使其与人的判断对齐。不过,我个人更倾向于使用 prompt engineering 来实现评估模型的对齐。
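For binary good/bad labels, one simple way to quantify how closely the evaluator model tracks a human is raw agreement plus Cohen's kappa, which discounts agreement expected by chance. This is a swapped-in measure chosen for illustration; the original post does not say which statistic was used, and the labels below are made up.

```javascript
// Paired binary labels for the same traces: true = "good", false = "bad".
function agreementStats(humanLabels, modelLabels) {
  if (humanLabels.length !== modelLabels.length || humanLabels.length === 0) {
    throw new Error('label arrays must be non-empty and the same length');
  }
  const n = humanLabels.length;
  let agree = 0;
  let humanGood = 0;
  let modelGood = 0;
  for (let i = 0; i < n; i++) {
    if (humanLabels[i] === modelLabels[i]) agree++;
    if (humanLabels[i]) humanGood++;
    if (modelLabels[i]) modelGood++;
  }
  const observed = agree / n;
  // Agreement expected by chance, given each rater's rate of "good" labels.
  const pH = humanGood / n;
  const pM = modelGood / n;
  const expected = pH * pM + (1 - pH) * (1 - pM);
  // Cohen's kappa; undefined (NaN) when chance agreement is already 1.
  const kappa = expected === 1 ? NaN : (observed - expected) / (1 - expected);
  return { observed, expected, kappa };
}

const human = [true, true, false, false, true, false, true, true];
const model = [true, true, false, true, true, false, false, true];
console.log(agreementStats(human, model));
```

Recomputing these numbers after each change to the critique prompt gives a simple way to see whether the evaluator is moving toward or away from the human labeler.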

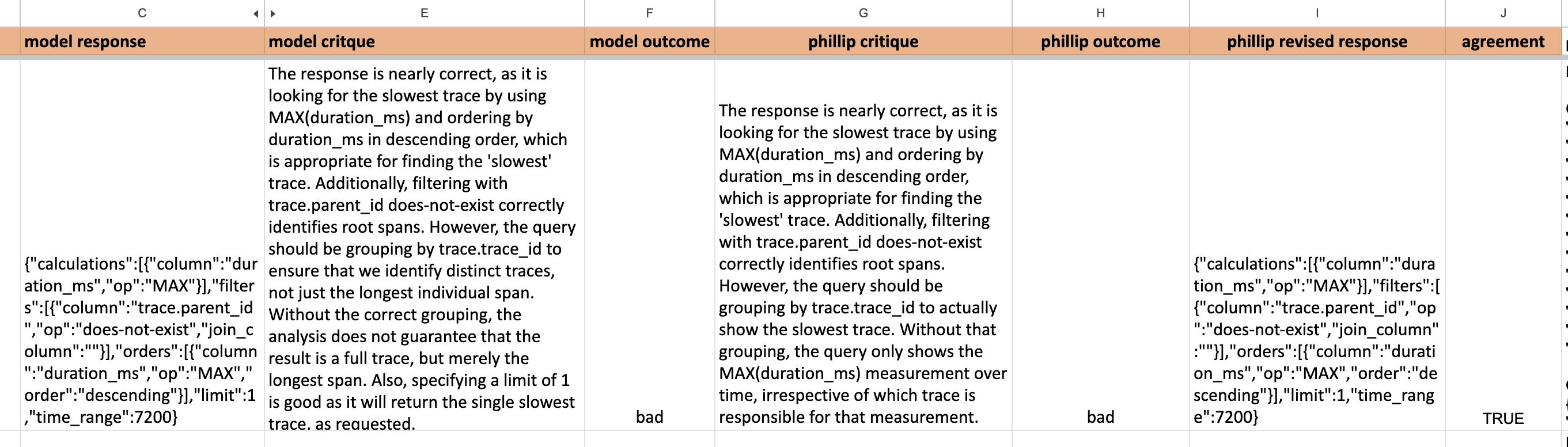

I love using low-tech solutions like Excel to iterate on aligning model-based eval with humans. For example, I sent my colleague Phillip the following spreadsheet every few days to grade for a different use-case involving a natural language query generator. This spreadsheet would contain the following information:

- model response: this is the prediction made by the LLM.

- model critique: this is a critique written by a (usually more powerful) LLM about your original LLM’s prediction.

- model outcome: this is a binary label the critique model assigns to the model response as being “good” or “bad.”

我喜欢用像 Excel 这样低技术含量的解决方案来迭代,以实现模型评估与人工评估的对齐。例如,对于一个涉及自然语言查询生成器的不同用例,我每隔几天就会给我的同事 Phillip 发送以下电子表格让他评分。这个表格包含以下信息:

- model response: 这是 LLM 做出的预测。

- model critique: 这是由一个(通常更强大的)LLM 对你原始 LLM 的预测所写的评判。

- model outcome: 这是评判模型分配给 model response 的一个二元标签,即“好”或“坏”。

Phillip then fills out his version of the same information - meaning his critique, outcome, and desired response for 25-50 examples at a time (these are the columns prefixed with “phillip_” below):

然后,Phillip 会填写他自己版本的信息——也就是他对每次 25-50 个例子的评判、结果和期望的响应(这些是下面以“phillip_”为前缀的列):

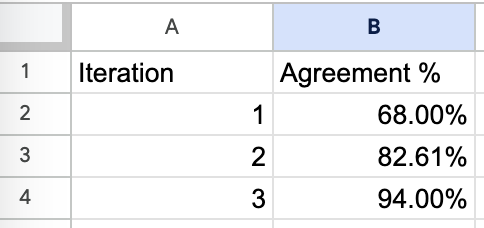

This information allowed me to iterate on the prompt of the critique model to make it sufficiently aligned with Phillip over time. This is also easy to track in a low-tech way in a spreadsheet:

这些信息让我能够迭代评判模型的 prompt,使其随着时间的推移与 Phillip 的判断充分对齐。这也很容易用电子表格这种低技术含量的方式来追踪:

This is a screenshot of a spreadsheet where we recorded our attempts to align model-based eval with a human evaluator.

这是一张电子表格的截图,我们在这里记录了我们试图将基于模型的评估与人工评估者对齐的尝试。

General tips on model-based eval:

- Use the most powerful model you can afford. It often takes advanced reasoning capabilities to critique something well. You can often get away with a slower, more powerful model for critiquing outputs relative to what you use in production.

- Model-based evaluation is a meta-problem within your larger problem. You must maintain a mini-evaluation system to track its quality. I have sometimes fine-tuned a model at this stage (but I try not to).

- After bringing the model-based evaluator in line with the human, you must continue doing periodic exercises to monitor the model and human agreement.

关于基于模型的评估的一些通用技巧:

- 使用你能负担得起的最强大的模型。要做好评判,通常需要高级的推理能力。相对于你在生产中使用的模型,你通常可以使用一个更慢、但更强大的模型来评判输出。

- 基于模型的评估是你更大问题中的一个元问题。你必须维护一个迷你的评估系统来追踪它的质量。我有时会在此阶段 fine-tune 一个模型(但我尽量不这么做)。

- 在让基于模型的评估器与人的判断对齐之后,你必须继续定期进行练习,以监控模型与人的一致性。

My favorite aspect about creating a good evaluator model is that its critiques can be used to curate high-quality synthetic data, which I will touch upon later.

关于创建一个好的评估模型,我最喜欢的一点是,它的评判可以用来筛选高质量的合成数据,这一点我稍后会谈到。

Level 3: A/B Testing

Finally, it is always good to perform A/B tests to ensure your AI product is driving user behaviors or outcomes you desire. A/B testing for LLMs compared to other types of products isn’t too different. If you want to learn more about A/B testing, I recommend reading the Eppo blog (which was created by colleagues I used to work with who are rock stars in A/B testing).

最后,进行 A/B 测试总是个好主意,以确保你的 AI 产品正在驱动你期望的用户行为或结果。LLM 的 A/B 测试与其他类型产品的 A/B 测试并无太大不同。如果你想了解更多关于 A/B 测试的知识,我推荐阅读 Eppo 博客(这个博客是由我以前的同事创建的,他们在 A/B 测试领域是摇滚明星)。

It’s okay to put this stage off until you are sufficiently ready and convinced that your AI product is suitable for showing to real users. This level of evaluation is usually only appropriate for more mature products.

你可以把这个阶段推迟,直到你准备充分,并确信你的 AI 产品适合向真实用户展示。这个层级的评估通常只适用于比较成熟的产品。

Evaluating RAG

Aside from evaluating your system as a whole, you can evaluate sub-components of your AI, like RAG. Evaluating RAG is beyond the scope of this post, but you can learn more about this subject in a post by Jason Liu.

除了评估你的整个系统,你还可以评估 AI 的子组件,比如 RAG。评估 RAG 超出了本文的范围,但你可以在 Jason Liu 的一篇文章中了解更多关于这个主题的内容。

Eval Systems Unlock Superpowers For Free

In addition to iterating fast, eval systems unlock the ability to fine-tune and debug, which can take your AI product to the next level.

评估体系免费解锁超能力

除了能让你快速迭代,评估体系还能解锁 fine-tuning 和调试的能力,这能将你的 AI 产品提升到一个新的水平。

Fine-Tuning

Rechat resolved many failure modes through fine-tuning that were not possible with prompt engineering alone. Fine-tuning is best for learning syntax, style, and rules, whereas techniques like RAG supply the model with context or up-to-date facts.

Fine-Tuning

Rechat 通过 fine-tuning 解决了许多仅靠 prompt engineering 无法解决的失败模式。Fine-tuning 最适合学习语法、风格和规则,而像 RAG 这样的技术则是为模型提供上下文或最新的事实。

99% of the labor involved with fine-tuning is assembling high-quality data that covers your AI product’s surface area. However, if you have a solid evaluation system like Rechat’s, you already have a robust data generation and curation engine! I will expand more on the process of fine-tuning in a future post.[4]

Fine-tuning 99% 的工作都是收集覆盖你 AI 产品功能面的高质量数据。然而,如果你有一个像 Rechat 那样坚实的评估体系,你就已经拥有了一个强大的数据生成和筛选引擎!我将在未来的文章中更详细地阐述 fine-tuning 的过程。[4]

Data Synthesis & Curation

To illustrate why data curation and synthesis come nearly for free once you have an evaluation system, consider the case where you want to create additional fine-tuning data for the listing finder mentioned earlier. First, you can use LLMs to generate synthetic data with a prompt like this:

数据合成与筛选

为了说明为什么一旦你有了评估体系,数据筛选和合成几乎是免费的,我们来看看之前提到的房源查找器的例子,假设你想为它创建额外的 fine-tuning 数据。首先,你可以用 LLM 通过下面这样的 prompt 来生成合成数据:

Imagine if Zillow was able to parse natural language. Come up with 50 different ways users would be able to search listings there. Use real names for cities and neighborhoods.

You can use the following parameters:

<omitted for confidentiality>

Output should be a JSON code block array. Example:

[

"Homes under $500k in New York"

]

想象一下 Zillow 能够解析自然语言。想出 50 种用户可能在那里搜索房源的不同方式。使用真实的城市和社区名称。

你可以使用以下参数:

<因保密而省略>

输出应该是一个 JSON 代码块数组。例如:

[

"纽约 50 万美元以下的房屋"

]

This is almost identical to the exercise for producing test cases! You can then use your Level 1 & Level 2 tests to filter out undesirable data that fails assertions or that the critique model thinks are wrong. You can also use your existing human evaluation tools to look at traces to curate traces for a fine-tuning dataset.

这几乎和我们为生成测试用例所做的练习一模一样!然后,你可以使用你的 Level 1 和 Level 2 测试来过滤掉那些不符合断言或者被评判模型认为是错误的不良数据。你也可以使用你现有的人工评估工具来查看 traces,从而为 fine-tuning 数据集筛选 traces。
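Filtering synthetic data through both levels might look like the sketch below. `passesAssertions` and `passesCritique` are placeholders for real Level 1 assertions and a real critique model's label, and the candidate records are invented for illustration.

```javascript
// Level 1 stand-in: a cheap assertion on the generated query text.
function passesAssertions(example) {
  return typeof example.query === 'string' && example.query.trim().length > 0;
}

// Level 2 stand-in: the label a critique model assigned earlier ("good" or "bad").
function passesCritique(example) {
  return example.critiqueOutcome === 'good';
}

// Keep only examples that clear both levels, and record why the others were dropped.
function curate(examples) {
  const kept = [];
  const dropped = [];
  for (const example of examples) {
    if (!passesAssertions(example)) dropped.push({ example, reason: 'assertion' });
    else if (!passesCritique(example)) dropped.push({ example, reason: 'critique' });
    else kept.push(example);
  }
  return { kept, dropped };
}

const candidates = [
  { query: 'Homes under $500k in New York', critiqueOutcome: 'good' },
  { query: '', critiqueOutcome: 'good' },
  { query: 'Condos near downtown Austin', critiqueOutcome: 'bad' },
];
const { kept, dropped } = curate(candidates);
console.log(kept.length, dropped.map((d) => d.reason));
```

Keeping the drop reason makes the rejected examples useful too: they point at failure modes worth reviewing by hand.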

Debugging

When you get a complaint or see an error related to your AI product, you should be able to debug this quickly. If you have a robust evaluation system, you already have:

- A database of traces that you can search and filter.

- A set of mechanisms (assertions, tests, etc) that can help you flag errors and bad behaviors.

- Log searching & navigation tools that can help you find the root cause of the error. For example, the error could be RAG, a bug in the code, or a model performing poorly.

- The ability to make changes in response to the error and quickly test its efficacy.

当你收到关于 AI 产品的投诉或看到错误时,你应该能够快速调试。如果你有一个强大的评估体系,你已经拥有了:

- 一个可以搜索和过滤的 traces 数据库。

- 一套可以帮助你标记错误和不良行为的机制(断言、测试等)。

- 可以帮助你找到错误根源的日志搜索和导航工具。例如,错误可能出在 RAG、代码中的 bug,或是模型表现不佳。

- 能够响应错误做出更改并快速测试其效果的能力。

In short, there is an incredibly large overlap between the infrastructure needed for evaluation and that for debugging.

简而言之,评估所需的基础设施和调试所需的基础设施之间有非常大的重叠。

Conclusion

Evaluation systems create a flywheel that allows you to iterate very quickly. It’s almost always where people get stuck when building AI products. I hope this post gives you an intuition on how to go about building your evaluation systems. Some key takeaways to keep in mind:

- Remove ALL friction from looking at data.

- Keep it simple. Don’t buy fancy LLM tools. Use what you have first.

- You are doing it wrong if you aren’t looking at lots of data.

- Don’t rely on generic evaluation frameworks to measure the quality of your AI. Instead, create an evaluation system specific to your problem.

- Write lots of tests and frequently update them.

- LLMs can be used to unblock the creation of an eval system. Examples include using an LLM to:

  - Generate test cases and write assertions

  - Generate synthetic data

  - Critique and label data etc.

- Re-use your eval infrastructure for debugging and fine-tuning.

评估体系能创造一个飞轮效应,让你能够非常快速地迭代。这几乎总是人们在构建 AI 产品时卡住的地方。我希望这篇文章能让你对如何构建自己的评估体系有一个直观的认识。请记住以下几个关键点:

- 消除查看数据的一切阻力。

- 保持简单。别买那些花哨的 LLM 工具。先用你手头已有的东西。

- 如果你没有在看大量的数据,那你的方法就是错的。

- 不要依赖通用的评估框架来衡量你的 AI 质量。相反,要为你自己的问题创建一个特定的评估体系。

- 写大量的测试,并经常更新它们。

- LLM 可以用来帮助你启动一个评估体系的创建。例如,使用 LLM 来:

  - 生成测试用例和编写断言

  - 生成合成数据

  - 评判和标注数据等

- 将你的评估基础设施复用于调试和 fine-tuning。

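If you found this post helpful or have any questions, I’d love to hear from you. My email is hamel@parlance-labs.com.

This article is adapted from a conversation I had with Emil Sedgh and Hugo Browne-Anderson on the Vanishing Gradients podcast. Thanks to Jeremy Howard, Eugene Yan, Shreya Shankar, Jeremy Lewi, and Joseph Gleasure for reviewing this article.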

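Footnotes

1. This is not to suggest that people are lazy. Many simply don’t know how to set up eval systems, so they skip these steps.

2. Some examples include arize, human loop, openllmetry, and honeyhive.

3. A reasonable rule of thumb is to keep reading logs until you feel you are no longer learning anything new.

4. If you can’t wait, I will be teaching a course on fine-tuning soon.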